Logistic Regression with Jupyter Notebook Link to heading

3. Hyperparameter Tuning Link to heading

Understanding Hyperparameters Link to heading

C: Regularization Strength The parameter C controls the regularization strength in logistic regression. It is the inverse of regularization strength; smaller values specify stronger regularization. Regularization helps prevent overfitting by penalizing large coefficients.

solver: Optimization Algorithm The solver parameter specifies the algorithm to use for optimization. Common choices include:

- newton-cg, lbfgs, liblinear: Suitable for small datasets.

- sag, saga: Efficient for large datasets.

- max_iter: Maximum Number of Iterations The max_iter parameter sets the maximum number of iterations the solver will use. This is useful for solvers that iterate to converge to a solution.

Training with Cross-Validation Link to heading

Performing cross-validation helps in finding the best parameters for your logistic regression model. Cross-validation divides the dataset into multiple folds and trains the model on different combinations of these folds, ensuring the model generalizes well on unseen data. Here’s how to perform cross-validation with GridSearchCV in scikit-learn:

Setting Up Cross-Validation Link to heading

- Define the Parameter Grid: Create a dictionary with parameters and their respective values to test.

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import LogisticRegression

# Define the parameter grid

param_grid = {

'C': [0.1, 1, 10, 100],

'solver': ['newton-cg', 'lbfgs', 'liblinear'],

'max_iter': [50, 100, 200, 300]

}

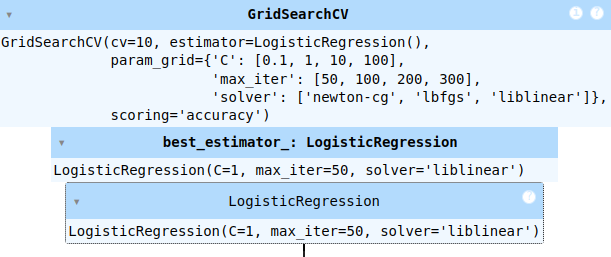

- Initialize GridSearchCV: Set up GridSearchCV with the logistic regression model and the parameter grid.

# Initialize the GridSearchCV

grid_search = GridSearchCV(LogisticRegression(), param_grid, cv=5, scoring='accuracy')

- Fit the Model: Perform cross-validation on the training data to find the best parameters.

# Fit the model

grid_search.fit(X_train, y_train)

- Select the Best Parameters: After fitting, retrieve the best parameters and use the best model for predictions.

# Best parameters

print("Best Parameters:", grid_search.best_params_)

# Best model

best_model = grid_search.best_estimator_

# Evaluate the best model

y_pred_best = best_model.predict(X_test)

print("Best Model Accuracy:", accuracy_score(y_test, y_pred_best))

Best Parameters: {'C': 1, 'max_iter': 50, 'solver': 'liblinear'}

Best Model Accuracy: 0.9090909090909091

This process ensures that the model is tuned for optimal performance and generalizes well to new data.